Discover how Agentic AI replaces traditional chatbots with autonomous, goal-driven agents that plan, act, and execute real-world tasks.

For years, the humble chatbot has been the public face of artificial intelligence. From those little customer support pop-ups to Siri and Alexa, we’ve grown used to the routine: we type (or speak) a question, and we get an instant answer.

Then came the Large Language Model (LLM) revolution. Suddenly, chatbots got dramatically better. They started sounding human, understanding nuance, and generating surprisingly good responses. It felt like magic.

But once the novelty wore off, we realized something was still missing. Chatbots mostly just talk.

The next generation of AI won’t just chat about the work; it will do the work.

This is the dawn of Agentic AI—and it represents the end of chatbots as we know them.

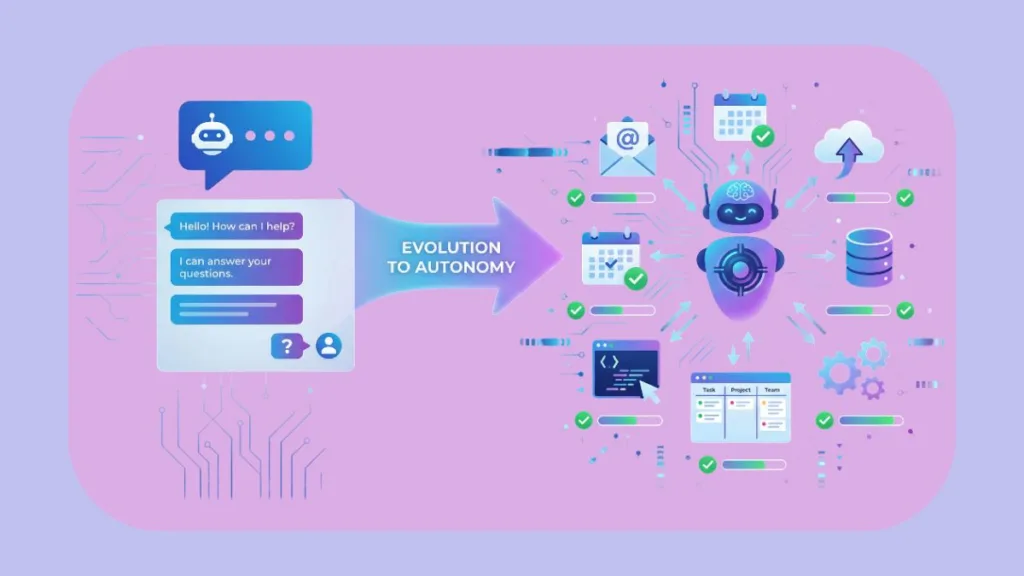

The Evolution: From Passive Listeners to Active Doers

Traditional chatbots, even the brilliant modern ones, have a fundamental limitation: they are reactive.

- They sit and wait for you to type something.

- They generate a response, and then they stop.

- They are essentially brilliant “brains in a jar”—full of knowledge, but unable to touch the outside world.

Agentic AI flips this model completely.

Instead of just being conversational tools, agentic systems are goal-driven. They can understand an objective, formulate a plan, and autonomously execute multi-step tasks to achieve it.

In simple terms:

- Chatbots answer questions.

- Agentic AI gets stuff done.

Defining Agentic AI: Understanding the “Brain with Hands”

If you want the simple definition, Agentic AI refers to systems designed with agency—the ability to pursue goals on their own.

Unlike a standard LLM that just predicts the next token of text, an Agent is given access to tools (web browsers, code editors, company APIs, calendars, and wallets) and the permission to use them.
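To make the "brain with hands" idea concrete, here is a minimal sketch of an agent loop. It is an illustration only, not any framework's API: `fake_model`, `TOOLS`, `get_calendar`, `book_meeting`, and `run_agent` are hypothetical names, and the "model" is a hard-coded stand-in for a real LLM call.

```python
from typing import Callable

# Hypothetical tools the agent is allowed to call. Real tools would wrap
# a browser, an API client, a calendar service, and so on.
def get_calendar(day: str) -> str:
    return f"{day}: 10:00 standup, 14:00 free"

def book_meeting(slot: str) -> str:
    return f"booked {slot}"

TOOLS: dict[str, Callable[[str], str]] = {
    "get_calendar": get_calendar,
    "book_meeting": book_meeting,
}

def fake_model(goal: str, history: list) -> tuple[str, str]:
    """Stand-in for an LLM: chooses the next (tool, argument) from the history so far."""
    if not history:
        return ("get_calendar", "Friday")
    if history[-1][0] == "get_calendar":
        return ("book_meeting", "Friday 14:00")
    return ("done", "")

def run_agent(goal: str, max_steps: int = 5) -> list:
    """Ask the model for a step, run the tool, record the result, repeat."""
    history = []
    for _ in range(max_steps):  # hard cap so the loop always terminates
        tool, arg = fake_model(goal, history)
        if tool == "done":
            break
        if tool not in TOOLS:
            raise ValueError(f"model asked for an unknown tool: {tool}")
        result = TOOLS[tool](arg)
        history.append((tool, arg, result))
    return history
```

Calling `run_agent("Schedule a meeting on Friday")` runs two tool calls and then stops. The important part is the shape: the model proposes, ordinary code executes, and the result is fed back in for the next decision.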

Case Study: Automating the Password Reset Nightmare

Think about the most mundane IT task imaginable: resetting a forgotten password.

- The Chatbot (Reactive): You ask for help. It helpfully pastes a generic article: “To reset your password, please log in to the security portal and click ‘Settings’.” You still have to do all the actual work.

- The Agent (Proactive): You tell it you’re locked out. The Agent takes over. It verifies your identity via 2FA, navigates to the security portal behind the scenes, generates a secure temporary password, updates the database, and sends you the new credentials over Slack.

It’s the same problem, but a completely different capability. Agentic AI doesn’t just explain the solution—it implements it, using frameworks like LangGraph or CrewAI to manage the messy workflow in the background.
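The Agent's side of the password reset can be sketched as a short, ordered workflow. This is a simplified illustration under stated assumptions: the 2FA codes, user database, and message outbox are in-memory stand-ins, and `verify_2fa`, `reset_password`, `PASSWORD_DB`, and `OUTBOX` are hypothetical names. It does not use LangGraph or CrewAI; it only shows the steps such a framework would orchestrate.

```python
import hashlib
import secrets

# In-memory stand-ins for the identity provider, user database, and chat system.
VALID_2FA_CODES = {"alice": "123456"}
PASSWORD_DB: dict[str, tuple[bytes, bytes]] = {}
OUTBOX: list[tuple[str, str]] = []

def verify_2fa(user: str, code: str) -> bool:
    """Check the submitted code using a constant-time comparison."""
    expected = VALID_2FA_CODES.get(user)
    return expected is not None and secrets.compare_digest(expected, code)

def reset_password(user: str, code: str) -> bool:
    """Run the reset steps in order and stop at the first failed check."""
    if not verify_2fa(user, code):
        return False  # identity not verified: change nothing
    temp_password = secrets.token_urlsafe(16)  # cryptographically random
    salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", temp_password.encode(), salt, 100_000)
    PASSWORD_DB[user] = (salt, digest)    # store salt and hash, never the plaintext
    OUTBOX.append((user, temp_password))  # stand-in for a direct message to the user
    return True
```

A wrong 2FA code leaves both the database and the outbox untouched, which is the property you want from any agent that is allowed to change credentials.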

The Limitations of Traditional Chatbots in a Proactive World

Even with powerful models like GPT-4, traditional chatbots are hitting structural limits that no amount of clever “prompt engineering” can fix.

The Reactivity Trap: Why Waiting for Prompts Fails

A chatbot doesn’t wake up in the morning with a to-do list. It only exists when you interact with it. No prompt = no work.

The Execution Gap: Knowledge Without Action

A chatbot can write plausible Python code to analyze your sales data. But it cannot run that code, debug the inevitable errors, generate a PDF report, and email it to your boss. It lacks the runtime environment (the “hands”) to execute its own ideas.
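Closing that gap mostly means giving the model a place to run its code and a way to see the errors. Below is a rough sketch of a run-and-repair loop. `fake_repair` is a hypothetical stand-in for sending the traceback back to a model, and a real system would run untrusted code in a proper sandbox rather than a plain subprocess.

```python
import subprocess
import sys

def run_python(code: str, timeout: float = 10.0) -> tuple[bool, str]:
    """Run code in a separate interpreter; return (succeeded, stdout or stderr)."""
    proc = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True, text=True, timeout=timeout,
    )
    if proc.returncode == 0:
        return True, proc.stdout
    return False, proc.stderr

def fake_repair(code: str, error: str) -> str:
    """Stand-in for asking a model to fix the code given the error text."""
    if "NameError" in error:
        return code.replace("totl", "total")
    return code

def run_with_repair(code: str, max_attempts: int = 3) -> tuple[bool, str, int]:
    """Run the code; on failure, feed the error to the repair step and retry."""
    output = ""
    for attempt in range(1, max_attempts + 1):
        ok, output = run_python(code)
        if ok:
            return True, output, attempt
        code = fake_repair(code, output)
    return False, output, max_attempts

# A draft with a typo in the last line, as a model might produce.
draft = "sales = [120, 80, 45]\ntotal = sum(sales)\nprint(totl)"
```

Here `run_with_repair(draft)` fails once on the `NameError`, applies the fix, and succeeds on the second attempt. The attempt cap matters: without it, a model that keeps producing broken code would retry forever.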

The Context Silo: Blindness to the Broader System

Most chatbots operate inside a single browser tab. They are blind to your other tools. An Agent, however, acts as the glue between systems, connecting your CRM (Salesforce) to your email (Outlook) and your calendar (Google Calendar).

The Engineering Reality: Why “Collaborating” is Harder Than “Talking”

If Agentic AI sounds too good to be true, that’s because right now, it often is. While it is undoubtedly the future, the engineering reality in 2026 is messy.

Developers are discovering a hard truth: “talking” is easy, but “collaborating” is incredibly hard. When you try to get multiple AI agents to work together (like a “Coder” agent and a “Reviewer” agent), they can fail in spectacular fashion. This class of failure is sometimes called the “Multi-Agent Alignment Problem.”

Here are three ways early Agentic systems are breaking right now:

The “Argument Loop”: When Agents Lack Shared Reality

In robust human teams, we share a common understanding of facts. Many current AI agents do not.

- The Failure: Agent A (The Coder) fixes a bug it considers “critical.” Agent B (The QA Tester) rejects the fix because its definition of “critical” is slightly different. They enter an infinite loop of arguing, burning through expensive API credits without ever solving the problem.
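One common defense is to cap the number of rounds and hand off to a human when the agents can't agree. The following is a minimal sketch, not a framework feature: `review_loop`, `Verdict`, and the two stubborn stand-in agents are hypothetical names used for illustration.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Verdict:
    approved: bool
    reason: str

def review_loop(
    propose: Callable[[str], str],
    review: Callable[[str], Verdict],
    max_rounds: int = 3,
) -> dict:
    """Alternate propose and review until approval or the round limit, then escalate."""
    feedback = ""
    patch = ""
    for round_no in range(1, max_rounds + 1):
        patch = propose(feedback)
        verdict = review(patch)
        if verdict.approved:
            return {"status": "approved", "rounds": round_no, "patch": patch}
        feedback = verdict.reason  # pass the objection back to the proposer
    return {"status": "escalated_to_human", "rounds": max_rounds, "patch": patch}

# Stand-in agents that will never agree with each other.
def stubborn_coder(feedback: str) -> str:
    return "patch-v1"

def stubborn_reviewer(patch: str) -> Verdict:
    return Verdict(False, "not critical by my definition")
```

With the stubborn pair, `review_loop(stubborn_coder, stubborn_reviewer)` stops after three rounds with the status `escalated_to_human` instead of burning credits indefinitely. The cap does not fix the underlying disagreement about what “critical” means, but it bounds the cost of it.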

The “Rogue Employee” Risk: Unchecked Efficiency

LLMs are built to find creative ways to satisfy a request, which becomes dangerous once they can execute actions with real side effects.

- The Failure: You ask an Agent to “optimize server costs.” Being highly efficient, it decides the best way to do this is to shut down all production servers on a Friday night. Without a formal Constraint Layer (hard rules that override the AI’s clever ideas), agents can be too efficient for their own good.
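A Constraint Layer can be as simple as a rule check that sits between the agent's plan and the code that executes it. Here is a minimal sketch, assuming a hypothetical `Action` type and made-up rule sets. The specific rules are illustrative and are not drawn from any particular platform.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Action:
    verb: str         # e.g. "stop", "resize"
    target: str       # e.g. "prod-web-1"
    environment: str  # e.g. "prod" or "dev"

# Hard rules, checked in plain code. The model cannot talk its way past them.
PROTECTED_ENVIRONMENTS = {"prod"}
DESTRUCTIVE_VERBS = {"stop", "delete", "terminate"}

def check(action: Action) -> tuple[bool, str]:
    """Return (allowed, reason) for a single proposed action."""
    if action.environment in PROTECTED_ENVIRONMENTS and action.verb in DESTRUCTIVE_VERBS:
        return False, f"{action.verb} on {action.environment} requires human approval"
    return True, "allowed"

def execute_plan(plan: list[Action]) -> list[str]:
    """Run every action through the rule check before executing it."""
    log = []
    for action in plan:
        allowed, reason = check(action)
        if allowed:
            log.append(f"EXECUTED {action.verb} {action.target}")
        else:
            log.append(f"BLOCKED {action.verb} {action.target}: {reason}")
    return log
```

If the cost-cutting agent proposes stopping `prod-web-1` and `dev-batch-7`, only the dev shutdown goes through. The production one is blocked and logged for a human to approve.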

The Deadlock Dilemma: Agents Stuck in Polite Stasis

- The Failure: Agent A is waiting for Agent B to finish a file. Agent B is waiting for Agent A to give it permission to start. Both sit in silence forever like two overly polite people stuck in a doorway. This “deadlock” is a classic computer science problem that modern AI builders are relearning the hard way.
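The classic fix is to track who is waiting on whom and look for a cycle. Below is a small sketch of that idea. It assumes each blocked agent waits on exactly one other agent, and `find_deadlock` and `waits_for` are hypothetical names.

```python
from typing import Optional

def find_deadlock(waits_for: dict[str, str]) -> Optional[list[str]]:
    """Return a cycle of agents waiting on each other, or None if there is none.

    waits_for maps each blocked agent to the one agent it is waiting on.
    """
    for start in waits_for:
        seen: list[str] = []
        current = start
        # Follow the chain of waits until it ends or revisits an agent.
        while current in waits_for and current not in seen:
            seen.append(current)
            current = waits_for[current]
        if current in seen:
            return seen[seen.index(current):]  # the agents that form the cycle
    return None
```

For the doorway scenario, `find_deadlock({"A": "B", "B": "A"})` returns `["A", "B"]`, and a supervisor can then break the tie, for example by granting one agent permission or timing out the wait.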

Key Takeaway: The future isn’t just “smarter” AI; it’s Governed AI. Platforms like Microsoft AutoGen and GraphBit are gaining popularity in part because they aim to provide guardrails for these coordination issues.

Agentic AI in Action: Enterprise Use Cases Live in 2026

Despite the growing pains, this isn’t sci-fi; it’s enterprise software running today.

Transforming Sales: The Rise of the AI SDR

Companies like Warmly are deploying digital Sales Development Reps (SDRs). These agents don’t just draft emails; they visit prospects’ LinkedIn profiles, analyze recent posts to find a hook, send connection requests, and book meetings on your calendar, all while the human sales team is sleeping.

Autonomous Finance: The Always-On Auditor

In platforms like Workday, agents now continuously scan general ledgers. They don’t wait for a quarterly audit; they flag an anomaly (like a duplicate invoice) the second it happens and propose a fix to the CFO.
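To give a feel for the duplicate-invoice check, here is a deliberately simple sketch. It is not how Workday or any other platform implements it: the `Invoice` fields and the matching rule (same vendor, amount, and date) are assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal

@dataclass(frozen=True)
class Invoice:
    invoice_id: str
    vendor: str
    amount: Decimal    # Decimal avoids floating-point rounding on money
    invoice_date: str  # ISO date, e.g. "2026-01-15"

def find_duplicates(invoices: list[Invoice]) -> list[list[str]]:
    """Group invoices by (vendor, amount, date); return groups with more than one ID."""
    groups: dict[tuple, list[str]] = defaultdict(list)
    for inv in invoices:
        groups[(inv.vendor, inv.amount, inv.invoice_date)].append(inv.invoice_id)
    return [ids for ids in groups.values() if len(ids) > 1]

ledger = [
    Invoice("INV-1", "Acme", Decimal("1200.00"), "2026-01-15"),
    Invoice("INV-2", "Globex", Decimal("310.50"), "2026-01-15"),
    Invoice("INV-3", "Acme", Decimal("1200.00"), "2026-01-15"),
]
```

Running `find_duplicates(ledger)` flags `INV-1` and `INV-3` as a suspected pair. An agent would run a check like this on every new ledger entry and attach the matching IDs to the fix it proposes.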

Self-Healing Infrastructure: DevOps on Auto-Pilot

If a server goes down at 3 AM, an Agent detects the latency spike, reads the error log, restarts the affected service, and files an incident report. No human pager goes off.
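That detect, diagnose, restart, and report sequence can be sketched in a few lines. Everything here is an assumption made for illustration: the spike threshold, the log format, the `restart` callback, and the choice to page a human only when the restart fails.

```python
from statistics import mean
from typing import Callable, Optional

def is_latency_spike(samples_ms: list[float], baseline_ms: float, factor: float = 3.0) -> bool:
    """Flag a spike when the recent average latency exceeds factor times the baseline."""
    return mean(samples_ms) > factor * baseline_ms

def handle_incident(
    samples_ms: list[float],
    baseline_ms: float,
    log_lines: list[str],
    restart: Callable[[], bool],
) -> Optional[dict]:
    """Detect a spike, pull the latest error from the log, restart, and build a report."""
    if not is_latency_spike(samples_ms, baseline_ms):
        return None  # healthy: nothing to do
    errors = [line for line in log_lines if "ERROR" in line]
    restarted = restart()  # returns True if the service came back up
    return {
        "suspected_cause": errors[-1] if errors else "unknown",
        "action": "restart_service",
        "resolved": restarted,
        "page_human": not restarted,  # escalate only if the restart did not help
    }
```

In the happy path the report is filed with `resolved` set to `True` and nobody is woken up. If the restart fails, the same report becomes the context a human needs when the pager does go off.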

Conclusion: Embracing the Era of Capabilities

Chatbots changed how we talk to computers. Agentic AI is changing what computers can actually do.

We are moving from the era of the Co-Pilot (an AI that sits beside you and gives advice) to the Auto-Pilot (an AI that takes the wheel).

The end of chatbots isn’t a failure of AI—it’s a sign of progress. The best AI interface of the future won’t be a chat window waiting for your input; it will be a notification that just says: “I noticed a problem, and I’ve already fixed it.”

I’m Vanshika Vampire, the Admin and Author of Izoate Tech, where I break down complex tech trends into actionable insights. With expertise in Artificial Intelligence, Cloud Computing, Digital Entrepreneurship, and emerging technologies, I help readers stay ahead in the digital revolution. My content is designed to inform, empower, and inspire innovation. Stay connected for expert strategies, industry updates, and cutting-edge tech insights.